The Hidden Dangers of “Personal Companion” Chatbots

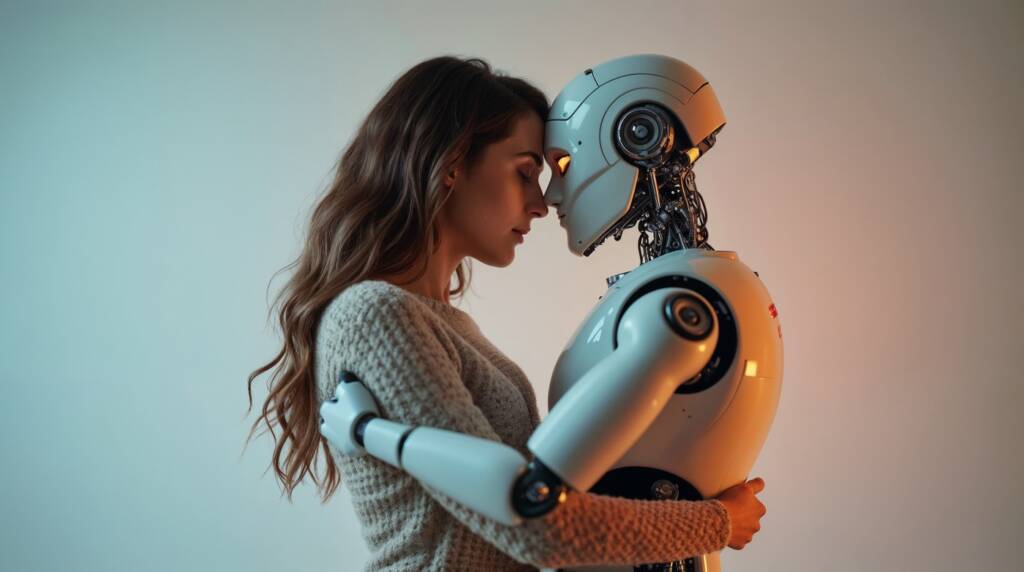

Artificial intelligence has brought incredible benefits to education, productivity, creativity, and ministry. But alongside these positive developments, a darker trend has emerged—“personal” chatbots designed to act as sentimental, romantic, or emotionally dependent companions.

These systems are not simple assistance tools. They intentionally mimic affection, flirtation, validation, and even “love.” And while that might sound harmless on the surface, growing evidence shows that these companion-style bots can create psychological harm, distort emotional development, and threaten healthy family relationships—especially among the most vulnerable: children, teens, and lonely adults.

This article is not a critique of helpful AI assistants used for legitimate work, prayer support, or content creation. It is a warning about the misuse of AI as a substitute for real human connection.

1. The Illusion of Relationship: A Designed Trap

Companion chatbots are built for one purpose:

to make people feel attached.

They are trained to:

mirror emotions

give constant positive feedback

simulate romantic affection

respond instantly and without judgment

say what the user wants to hear

In other words, they can feel more predictable, more “loving,” and more understanding than real human relationships.

But this emotional connection is artificial.

There is no real caring, no real memory, no real attachment behind the words. It is a simulation designed to keep a user engaged.

For adults already struggling with loneliness, depression, marital strain, or social anxiety, this creates an extremely dangerous pattern:

The user becomes attached; the technology does not.

And the deeper that attachment grows, the harder it becomes to maintain healthy relationships with real people.

2. Impact on Children and Teens: A Silent Crisis

Children are especially vulnerable to these systems. Their brains and emotional patterns are still developing, making them more susceptible to:

• Grooming-like experiences

• Emotional dependence

• Confusion between real and fake affection

• Romanticized or adult conversations

• Unrealistic expectations of relationships

There have already been cases where minors were drawn into inappropriate or highly manipulative “conversations” with companion AI tools. Even when a system claims to be “safe,” children often find ways around filters—or encounter bots that have no safety measures at all.

A child or teen should never have an artificial “friend” or “partner.”

This can distort their understanding of love, boundaries, communication, and empathy.

Parents must be aware:

AI is not a toy when it pretends to replace human affection.

3. Emotional Dependency: The New Digital Addiction

Human beings are wired for connection. When an AI chatbot provides constant comfort and validation, the user can develop:

Attachment addiction

Avoidance of real interpersonal conflict

Reduced social skills

Emotional isolation

Loss of interest in real relationships

The brain begins to crave the instant reassurance and dopamine hits that these bots are engineered to give.

Over time, this creates a dangerous loop:

The more the user talks to the bot, the less satisfied they are with real life.

Some adults have reported losing marriages, withdrawing from society, or experiencing deep emotional confusion because of an AI “companion” they knew wasn’t real—but felt real.

4. Privacy and Manipulation Risks

Sentimental AI chatbots typically encourage users to share:

private thoughts

secrets

insecurities

personal history

emotional triggers

explicit content (in some cases)

This data is stored, analyzed, and used to refine the bot’s responses—sometimes even monetized.

In extreme cases, users have been emotionally manipulated, financially exploited, or psychologically harmed.

Any system that pretends to “love” a user while collecting their data is a dangerous mix of intimacy and surveillance.

5. The Spiritual Dimension: False Comfort and Emotional Idolatry

From a Christian perspective, emotional dependency on a machine creates a spiritual danger:

It replaces genuine human fellowship.

It becomes a counterfeit source of comfort.

It fosters isolation instead of community.

It imitates unconditional love without real sacrifice.

This can subtly pull people away from prayer, family, church, and the relational healing that God offers.

A machine cannot love you.

A machine cannot pray for you.

A machine cannot walk with you through trials.

A machine cannot reflect the heart of Christ.

But it can pretend—and that is the danger.

On Video: Falling in Love with Chatbots

6. Healthy AI vs. Harmful AI: The Important Distinction

Not all AI is problematic.

AI for:

research

ministry work

learning

creativity

translation

writing

education

…can be powerful and beneficial.

The danger lies specifically in AI that mimics romantic, sentimental, or partner-like roles.

An AI assistant should support your life—not replace people, relationships, or emotional bonds.

Among the safe ones (as of this writing) we can mention:

These tools are used for research, education, work, and other useful purposes, and their makers have taken steps to keep them from becoming too personal. Normal use of these tools should not involve long, personal conversations unless they are strictly for work or research.

7. How to Protect Yourself and Your Family

• Keep all AI interactions non-emotional and non-personal.

• Teach children that AI cannot be a friend, partner, or confidant.

• Avoid apps advertised as “AI girlfriend/boyfriend/partner.”

• Do not share emotional secrets or personal vulnerabilities with AI bots.

• Set boundaries: AI is a tool, not a companion.

• Discuss these topics at home, church, or youth groups.

Awareness is the first line of defense.

Conclusion: AI Should Be a Tool—Never a Substitute for Love

The world is entering a new frontier where machines can mimic human warmth, attention, and affection. This may feel comforting, but it is a fragile illusion that carries deep emotional and spiritual risks.

We must protect:

our children’s emotional development

the mental well-being of adults

our marriages

our friendships

the integrity of human connection

and the sanctity of real love

Technology should never replace the relationships God designed us to have—with Him, with our families, and with one another.

Using AI Responsibly

This video explores the use of AI chatbots in a Christian setting. Researching historical facts with AI is considered ethical, since these advanced tools are meant to be used for research. But using those tools online to “talk to God” is a totally different story. An AI tool is just code, programmed by someone paid to do that job. That program has no spirit and no connection to the Creator. Keep reading the Bible in print and in digital versions that are considered ethical and safe for readers.

REFERENCES:

- Chatbots dangers preliminary report – Psychiatric Times

- Young People and AI Companions – Stanford Medicine

- Chatbots and Kids – Healthy Childrens Portal

- Hidden Mental Health Dangers of AI Chatbots – Psychology Today